You'll have better visibility into where your application is spending the most time and easily identify bottlenecks that may affect application performance. The issues we triage in the Listing Data Pipeline often have the same theme: the teams performing root cause analysis do not have enough visibility into how the data is flowing from start to finish. Drilling into a specific Trace, we see the same waterfall diagram representing the time taken by each of the components involved. Traditional monitoring tools focused on monitoring monolithic applications are unable to serve the complex cloud-native architectures of today. See "Follow span tag naming conventions" to learn about OpenTelemetry naming conventions for span tags. It might also reveal major changes in performance, such as a complete service failure that causes all of the sampled transactions to result in errors.


As a result, the service has two unique sets of span tags, and two identities. Drawing from the OpenTracing design, we agreed that the structure of the trace log message would be very similar to what's published there. Improving our understanding of what's going on with our distributed systems is a topic well covered under HTTP request tracing; however, current tooling comes off as clunky for Data Pipelines. Since the Trace logs produced by components are intermixed with operation logs, we used Splunk's ability to route an incoming message from the intended index into a dedicated index for our distributed tracing.
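
As a rough illustration of what one such trace log message could look like, here is a minimal Python sketch. The field names (`log_type`, `span_id`, `context`, and so on) and the component name are assumptions made for this example, loosely modeled on OpenTracing span fields; they are not the actual message format used in the pipeline.

```python
import json
import uuid


def build_trace_log(trace_id, component, operation, start_ms, end_ms, context=None):
    """Return one JSON trace log line for a single processed event.

    All field names here are hypothetical, chosen only for illustration.
    """
    record = {
        "log_type": "trace",               # hypothetical marker a router could match on
        "trace_id": trace_id,              # shared by every component that handles the event
        "span_id": uuid.uuid4().hex[:16],  # unique per component-level operation
        "component": component,
        "operation": operation,
        "start_ms": start_ms,
        "end_ms": end_ms,
        "duration_ms": end_ms - start_ms,
        "context": context or {},          # optional, team-supplied outcome details
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical component and trace identifier.
line = build_trace_log("abc123", "listing-normalizer", "process_event",
                       1000, 1250, {"outcome": "accepted"})
print(line)
```

A marker field such as `log_type` is one way a log platform could tell trace messages apart from ordinary operation logs before routing them to a dedicated index; the routing itself is configured on the Splunk side and is not shown here.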


For SREs, this means reduced stress and better MTTRs. An IT or SRE team that notices a performance problem with one application component, for example, can use a distributed tracing system to pinpoint which service is causing the issue, then collaborate with the appropriate development team to address it. Once your application has been instrumented, you'll want to begin collecting this telemetry using a collector. Splunk, Splunk> and Turn Data Into Doing are trademarks or registered trademarks of Splunk Inc. in the United States and other countries. With Splunk, a single person can easily identify the root cause in a matter of minutes. Want to skip the reading and experience it for yourself?

At the core of a trace is a directed acyclic graph of spans. Tag Spotlight is a one-stop solution to analyze all infrastructure, application and business-related tags (indexed tags). In the image below, can you guess which microservice is ultimately responsible for the errors in the application? When you trace and analyze all transactions, you can detect cases of user experience degradation that you would otherwise miss by not tracing the experience of every user.


If certain types of transactions are not well represented among those that are captured, a sampling-based approach to tracing will not reveal potential issues with those transactions. Instrumenting an application requires using a framework like OpenTelemetry to generate traces and measure application performance to discover where time is spent and locate bottlenecks quickly. A quick inspection of the API token indicates that the word "test" is present in the token, resulting in restricted access to the Buttercup Payments API. Their versatility is what allows this concept to be so adaptable to any complex system. The fundamental goal behind tracing (understanding transactions) is always the same.
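
To make the sampling limitation concrete, the following sketch estimates how likely it is that a rare kind of transaction never shows up in the sampled data. It assumes uniform, independent sampling at a fixed rate, which is a simplification for illustration; real samplers may behave differently, and the numbers below are hypothetical.

```python
def prob_all_missed(sample_rate, occurrences):
    """Probability that none of `occurrences` transactions is captured,
    assuming each one is sampled independently with probability `sample_rate`."""
    return (1.0 - sample_rate) ** occurrences


# Hypothetical scenario: 1% sampling, 50 failing transactions in the window.
p = prob_all_missed(0.01, 50)
print(f"chance that no failing transaction is sampled: {p:.1%}")
```

Under these assumptions there is roughly a 60% chance that not a single one of the 50 failing transactions is captured, so the problem would leave no evidence in the trace data at all.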

Splunk 7 made the metrics index available, which is a way to store time series data. The trace is made up of a collection of spans (single operations), which contain a beginning and ending time, a trace ID to correlate them to the specific user transaction involved, as well as some identifier (or tag) to add additional information about the request, like the particular version of the microservice that generated the span. By this point we had gained the ability to troubleshoot the flow of individual events.
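
The span fields described above can be sketched as a small data structure. This is an illustrative model only: the class and field names are assumptions for the example and do not correspond to any particular tracing library's types.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Span:
    """One operation within a trace (hypothetical field names)."""
    trace_id: str                     # correlates the span with one user transaction
    span_id: str
    operation: str
    start_ms: int
    end_ms: int
    parent_id: Optional[str] = None   # None for the root span
    tags: Dict[str, str] = field(default_factory=dict)

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def group_by_trace(spans: List[Span]) -> Dict[str, List[Span]]:
    """Collect spans into traces keyed by trace ID."""
    traces = defaultdict(list)
    for span in spans:
        traces[span.trace_id].append(span)
    return dict(traces)


spans = [
    Span("t1", "a", "checkout", 0, 120, tags={"version": "v2"}),
    Span("t1", "b", "charge_card", 10, 90, parent_id="a"),
    Span("t2", "c", "checkout", 5, 40),
]
traces = group_by_trace(spans)
print({trace_id: len(members) for trace_id, members in traces.items()})
```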

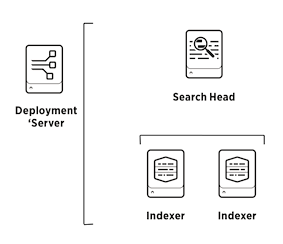

The following image illustrates the relationship between traces and spans: A span might refer to another span as its parent, indicating a relationship between operations involved in the trace. This relationship could indicate that, for example, span A makes a service call that triggers the operation captured by span B. You can sign up to start a free trial of the suite of products, from Infrastructure Monitoring and APM to Real User Monitoring and Log Observer. 2005-2022 Splunk Inc. All rights reserved.

Once again, this saved teams from doing any work within their components, and the burden was entirely on Splunk. You must collect additional data, such as the specific service instance or version that handles the request and where it is hosted within your distributed environment, in order to understand how requests flow within your complex web of microservices. A trace is a collection of operations that represents a unique transaction handled by an application and its constituent services. Stowing Trace logs into a dedicated index demonstrated speed gains on the Splunk queries and allowed us to specify the retention policy on these Events. To provide guidance, this blog post explains what distributed tracing is, distributed tracing best practices, why it's so important and how best to add distributed traces to your observability toolset. But this approach would likely not yield much insight into more nuanced performance trends and can't scale enough to measure the thousands of distributed services in a transient containerized environment.
And because manual analytics doesn't work at the massive scale teams face when they trace every transaction, Splunk also provides machine learning capabilities to help detect anomalies automatically, so you can focus on responding to, rather than finding, the problems within your environment. A more common way to represent a trace and the spans is a waterfall diagram through time. You can use span tags to query and filter traces, or to get information about the spans of a trace during troubleshooting. In our earlier example, a slight degradation in performance, such as an increase in average latency from 1 second to 1.2 seconds for users hosted in a particular shard of the backend database, may go undetected. Note that span tags in Splunk APM are distinct from metadata tags in Splunk Infrastructure Monitoring, which are searchable labels or keywords you can assign to metric dimensions in the form of strings rather than as key-value pairs. To gather traces, applications must be instrumented. Splunk APM collects incoming spans into traces and analyzes them to give you full fidelity access to your application data. Furthermore, it was difficult to gauge the speed from start to finish and measure ourselves against our SLAs. The server then processes the request, and the response is then sent back to the client. This type of visibility allows DevOps engineers to quickly identify issues affecting application performance.

Johnathan is part of the Observability Practitioner team at Splunk, and is here to help tell the world about Observability. In addition to Observability, Johnathan's professional interests include training, DevOps culture, and public speaking. Johnathan holds a Bachelor of Science degree in Network Administration from Western Governors University.
Splunk APM captures all transactions with a NoSample full-fidelity ingest of all traces alongside your logs and metrics.
While a microservices-based deployment can offer organizations improved scalability, better fault isolation and architectures agnostic to programming languages and technologies, the primary benefit is a faster go-to-market. We asked each team to log a single trace message for each Event their component processes. Determining exactly what it takes to fulfill a user's request becomes more challenging over time. Within the context of the client, a single action has occurred. What really helped us get a foothold on this project was prior work by Tom Martin using Splunk and Zipkin. With a NoSample approach, even nuanced performance issues become readily identifiable, especially when you can use AI-based alerting and automatic outlier reporting to correlate transaction data with other information from your environment to help pinpoint the root cause. Likewise, NoSample tracing can help pinpoint where the root cause of a problem lies within a complex, cloud-native application stack. Building out DevOps engagements for continuous test automation and pushing application releases into production, he has broken new ground in next-generation hybrid cloud management solutions with predictive insights for IT Ops teams in the software-defined datacenter. Because myService reports a tenant span tag for one endpoint and not another, it forces the endpoint without a specified tenant span tag to have a tenant span tag value of unknown. APM objects can generate multiple identities that correspond to the same APM object. In distributed, microservices-based environments, however, tracing requires more than just monitoring requests within a single body of code.
Faced with performance problems like these, teams can trace the request to identify exactly which service is causing the issue.
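
The `myService` example above, where one endpoint reports a `tenant` span tag and another does not, can be modeled with a short sketch. This is a simplified illustration of the idea that each unique combination of indexed span tag values yields a separate identity; it is not how Splunk APM is implemented, and the tag value `gold`, the `http.method` tags, and the function name are hypothetical.

```python
INDEXED_TAGS = ("tenant",)  # hypothetical set of indexed span tags


def identity(service, span_tags, indexed_tags=INDEXED_TAGS):
    """Build an identity from the service name plus its indexed tag values.

    A missing indexed tag is filled in with the value "unknown".
    """
    return (service,) + tuple(
        (tag, span_tags.get(tag, "unknown")) for tag in indexed_tags
    )


observed = [
    ("myService", {"tenant": "gold", "http.method": "GET"}),  # endpoint that reports tenant
    ("myService", {"http.method": "POST"}),                   # endpoint that does not
    ("myService", {"tenant": "gold"}),
]
identities = {identity(service, tags) for service, tags in observed}
for ident in sorted(identities):
    print(ident)
```

Only the indexed tag contributes to the identity, so the three observed spans collapse into two identities for the same service: one with `tenant` set to `gold` and one with `tenant` set to `unknown`.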

The collector provides a unified way to receive, process, and export application telemetry to an analysis tool like Splunk APM, where you can create dashboards and business workflows and identify critical metrics. Distributed tracing is the only way to associate performance problems with specific services within this type of environment. As a result, the team would not identify these issues until they grew into major disruptions. OpenTracing came into the picture as a vendor-neutral set of APIs that allows you to swap out the tracing platform without having to rewrite your application. In this image, span C is also a child of span B, and so on.
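
The parent and child chain described above (span B is a child of span A, span C is a child of span B) can be turned into a simple text waterfall. The sketch below uses plain dictionaries with hypothetical field names and timings, and indents each span under its parent.

```python
from collections import defaultdict

# Hypothetical spans: B is a child of A, and C is a child of B.
spans = [
    {"span_id": "A", "parent_id": None, "start_ms": 0, "end_ms": 100},
    {"span_id": "B", "parent_id": "A", "start_ms": 10, "end_ms": 80},
    {"span_id": "C", "parent_id": "B", "start_ms": 20, "end_ms": 60},
]


def waterfall(spans):
    """Return indented lines, one per span, ordered parent first."""
    children = defaultdict(list)
    for span in spans:
        children[span["parent_id"]].append(span)

    lines = []

    def visit(span, depth):
        lines.append(
            "  " * depth + f'{span["span_id"]} {span["start_ms"]}-{span["end_ms"]}ms'
        )
        for child in sorted(children[span["span_id"]], key=lambda s: s["start_ms"]):
            visit(child, depth + 1)

    for root in children[None]:  # root spans have no parent
        visit(root, 0)
    return lines


for row in waterfall(spans):
    print(row)
```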

Root cause error mapping with our dynamic service map also makes things easy when debugging a microservices-based deployment. Unique to Splunk APM is our AI-Driven Directed Troubleshooting, which automatically provides SREs with a solid red dot indicating which errors originate in a microservice and which originated in downstream services. A trace is a collection of transactions (or spans) representing a unique user or API transaction handled by an application and its constituent services. After all spans of a trace are ingested and analyzed, the trace is available to view in all parts of APM. The next touchpoint in the trace would involve the backend services, which accept the input and perform any necessary data processing. Distributed tracing refers to the process of following a request as it moves between multiple services within a microservices architecture. You also have a quick summary of each service by error rate, top error sources and service by latency (P90). From within Tag Spotlight, you can easily drill down into the trace after the code change to quickly view example traces and dive into the details affecting the paymentservice microservice.

Spans can be used to represent the duration of a microservice, the method, or some outbound call. In other words, traces provide visibility that frontend developers, backend developers, IT engineers, SREs and business leaders alike can use to understand and collaborate around performance issues. To illustrate the limitations of a probabilistic sampling approach, let's go back to the example of the three-tiered application described above. For many IT operations and site reliability engineering (SRE) teams, two of these pillars, logs and metrics, are familiar enough. By monitoring the request's status and performance characteristics on all of these services, SREs and IT teams can pinpoint the source of performance issues. Looking only at requests as a whole, or measuring their performance from the perspective of the application frontend, provides little actionable visibility into what is happening inside the application and where the performance bottlenecks lie. Because each service in a microservice architecture operates and scales independently from others, you can't simply trace a request within a single codebase. The client will begin by sending a request over to the server for a specific customer. These are only some of the reasons why GigaOm has recognized Splunk as the only Outperformer in their Cloud Observability report for 2021. Distributed tracing follows a request (transaction) as it moves between multiple services within a microservices architecture, allowing engineers to identify where the service request originates (the user-facing frontend application) and follow it throughout its journey with other services. In our tooling we use the metrics index to store computed durations of traces for total times as well as their breakdowns.
Span metadata includes a set of basic metadata including information such as the service and operation. Of course, the example above, which involves only a small number of microservices, is an overly simplified one. The management of modern software environments hinges on the three so-called pillars of observability: logs, metrics and traces. Our next step was to look at the pipeline as a whole with the data we had collected to gain visibility at a higher level. Traces are distributed across different services, so the industry refers to this process of tagging spans and correlating them together as distributed tracing. We gained a lot of visibility into our listing pipeline by implementing a distributed tracing solution in Splunk. All other brand names, product names, or trademarks belong to their respective owners. Splunk Log Observer is designed to enable DevOps, SRE, and platform teams to understand the "why" behind application and cloud infrastructure behavior. When we explored third-party offerings, we noticed that all would require a non-trivial effort to retrofit into our pipeline. Components already sent payloads to each other throughout the Listing Pipeline, so we amended the data contracts to include the Trace identifier as a meta field. What's more, how requests move within the systems is rarely obvious from a surface-level view: Monitoring just an application frontend tells you nothing about the state of the orchestrator that is helping to manage the frontend, for example, or about the scale-out storage system that plays a role in processing requests that originate through the frontend.
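
The duration computation mentioned above (total time per trace plus a breakdown) can be illustrated outside of Splunk with a short Python sketch. In the pipeline described here the numbers were computed and stored in Splunk's metrics index; the record fields and component names below are assumptions made for the example.

```python
from collections import defaultdict


def trace_durations(records):
    """Compute total elapsed time and per-component time for each trace."""
    by_trace = defaultdict(list)
    for rec in records:
        by_trace[rec["trace_id"]].append(rec)

    result = {}
    for trace_id, recs in by_trace.items():
        # Total time runs from the earliest start to the latest end.
        total = max(r["end_ms"] for r in recs) - min(r["start_ms"] for r in recs)
        breakdown = defaultdict(int)
        for r in recs:
            breakdown[r["component"]] += r["end_ms"] - r["start_ms"]
        result[trace_id] = {"total_ms": total, "by_component": dict(breakdown)}
    return result


# Hypothetical trace log records for one event moving through three components.
records = [
    {"trace_id": "t1", "component": "ingest", "start_ms": 0, "end_ms": 100},
    {"trace_id": "t1", "component": "transform", "start_ms": 150, "end_ms": 400},
    {"trace_id": "t1", "component": "publish", "start_ms": 400, "end_ms": 450},
]
print(trace_durations(records))
```

Note that the total includes time spent waiting between components (here, the gap between `ingest` and `transform`), which is why it can exceed the sum of the per-component durations.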

But with all of these benefits comes a new set of challenges. We also embrace open standards and standardize data collection using OpenTelemetry so that you can get maximum value quickly and efficiently while maintaining control of your data. In this environment, a distributed trace of the user's request would start by recording information about the request's status on the first frontend service: which data the user inputs and how long it takes the service to forward that data to other services.

You can add custom span tags via the OpenTelemetry Collector, or when you instrument an application. Given the complexity of monitoring requests that involve so many different types of services, distributed tracing that allows you to trace every transaction can be challenging to implement. And it is, if you take a manual approach that requires custom instrumentation of traces for each microservice in your application, or if you have to deploy agents for every service instance you need to monitor (a task that becomes especially complicated when you deploy services using constantly changing hosting environments like a Kubernetes environment or a serverless model). Tag Spotlight lets you dive even deeper than this to determine which version of paymentService is responsible. If a set of indexed span tags for a span that corresponds to a certain APM object is unique, the APM object generates a new identity for the unique set of indexed span tags. In many cases, NoSample distributed tracing is the fastest way to understand the root cause of performance problems that impact certain types of transactions or users, such as user requests for a particular type of information or requests initiated by users running a certain browser. Splunk APM is the future of data collection, standardizing access to all telemetry data and helping organizations avoid vendor lock-in. Another great feature is the AI-driven approach to sift through trace data in seconds and immediately highlight which microservice is responsible for errors within the dynamic service map. Our dynamic service map is just one example of how Splunk APM makes it easy to understand service dependencies and helps you debug your microservices more quickly. Alternatively, errors that result from some transactions due to certain types of user input may go unnoticed because the errors would not appear frequently enough in the sampled data to become a meaningful trend.
And for users, this means fewer glitches in the product and an overall better experience. We needed a solution that would work for ETL jobs, serverless components, and web services. We gave teams the ability to add additional context to the message, which would help us understand the outcome of the component's processing. In modern, cloud-native applications built on microservices, however, traces are absolutely critical for achieving full observability. The common denominator between all components was that all were producing system logs and most were shipping these logs to Splunk.

What makes this problem very difficult is that it's a distributed system spanning multiple teams and technology stacks. This "Observe Everything" approach delivers distributed tracing with detailed information about your request (transaction) to ensure you never miss an error or high-latency transaction when debugging your microservice. In order to gain visibility into how a system is operating, we want to follow the request from start to end through the different components. In some cases, the ephemeral nature of distributed systems that causes other unrelated alerts to happen might even exacerbate troubleshooting. Each span contains metadata about the operation captured by the span and the service in which the operation took place. From there, teams can use AI-backed systems to interpret the complex patterns within trace data, which would be difficult to recognize through manual interpretation, especially when dealing with complex, distributed environments in which relevant performance trends become obvious only when comparing data across multiple services. In a real-world microservices environment, distributed traces often require tracing requests across dozens of different services. Each of these data sources provides crucial visibility into applications and the infrastructure hosting them. The screenshot shows the "Logs for trace 548ec4337149d0e8" button from within the selected trace to inspect logs quickly.
It significantly cuts down the amount of time to determine the root cause of an issue, from hours to minutes.
