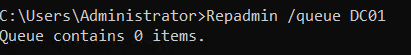

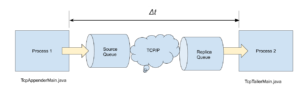

Code: 234, e.displayText() = DB::Exception: No active replica has part Is there a way to purge replication queue? Use CFQ scheduler for HDDs and noop for SSDs. Set limit on number of open files (default: maximum). clickhouse-client -i clickhouse.timeflow.com:2131 -u benjaminwootton --password ABC123. High Reliability Data Loading on ClickHouse Altinity Engineering Webinar 1 2. status ( Enum8) Status of the query. It is Linearly Scalable, Blazing Fast, Highly Reliable, Fault Tolerant, Data compression, Real time query processing, Web analytics, Vectorized query execution, Local and distributed joins. To read data from a Kafka topic to a ClickHouse table, we need three things: A target MergeTree table to provide a home for ingested data. ClickHouse release 1.1.54327. table ( String) Name of the table. It was released on March 17, 2022 - 3 months ago Fix MaterializedPostgreSQL (experimental feature) adding new table to replication (ATTACH TABLE) after manually removing (DETACH TABLE). The system is marketed for high performance. If we were connecting to a different secured Clickhouse machine, we can specify these details at the command line: Copied. A node-schedule scheduler that will trigger scheduled plugin tasks (runEveryMinute, runEveryDay, etc). DEAD: Cluster is inoperable ( health for every host in the cluster is DEAD). It is a Foreign Data Wrapper (FDW) for one of the fastest column store databases; "Clickhouse". by typing clickhouse-client in the terminal. ClickHouse could easily see that the part it is moving to detached is in replication queue on the replica it is cloning and it is also the source replica and it also has the part locally Sounds like an easy fix but the evil is in the details. ClickHouse clusters that are configured on Kubernetes have several options based on the Kubernetes Custom Resources settings. Once ZooKeeper has been installed and configured, ClickHouse can be modified to use ZooKeeper. 
Replication alter partitions sync Management console CLI API SQL. Car coordinates: There are two configuration keys that you can use: global ( kafka) and topic-level ( kafka_* ). clickhouse.repl.queue.delay.relative.max (double gauge) (ms) Relative delay is the maximum difference of absolute delay from any other replica fetches or replication queue bookkeeping) Reads clickhouse.reads (long gauge) Number of read (read, pread, io_get events, etc.)

Deduplication at the record level is a fairly common requirement in ClickHouse. ClickHouse servers are managed by systemd and normally restart following a crash. Date and time when the data was generated, datetime, in YYYY-MM-DD HH:MM:SS format. select * from system.zookeeper where path = '/clickhouse/task_queue/ddl'; The DDL task queue is full (1000 items). ClickHouse can record metrics internally when system.metric_log is enabled in config.xml. The document is deliberately short and practical, with working examples of SQL statements. Install ClickHouse. InfluxDB: Use the embedded exporter, plus Telegraf.

Add new column last_queue_update_exception to system.replicas table. Changes to the table structures are also captured, making this information available for SQL Server replication purposes.

port (UInt16): Host port. Data replication: replication is only supported for tables in the MergeTree family. For distributed queries, ClickHouse behavior with lagging replicas is controlled by the settings max_replica_delay_for_distributed_queries and fallback_to_stale_replicas_for_distributed_queries. Set up MySQL-to-ClickHouse replication.
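The interaction of the two settings named above can be illustrated with a small Python sketch. This is a simplified model and not ClickHouse's actual implementation: the `Replica` class, the `usable_replicas` function, and the default values used here are assumptions made for illustration. The idea is that replicas lagging more than the allowed delay are skipped, and if none is fresh enough, the least stale one is used only when the fallback is enabled.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str           # hypothetical replica label
    delay_seconds: int  # how far this replica lags behind


def usable_replicas(replicas, max_replica_delay=300, fallback_to_stale=True):
    """Return the replicas a distributed query may use (simplified model)."""
    # Replicas that lag more than the allowed delay are not used.
    fresh = [r for r in replicas if r.delay_seconds <= max_replica_delay]
    if fresh:
        return fresh
    # No fresh replica: optionally fall back to the least stale one.
    if fallback_to_stale and replicas:
        return [min(replicas, key=lambda r: r.delay_seconds)]
    return []
```

With the fallback disabled and every replica lagging, the sketch returns an empty list, which corresponds to the query failing rather than reading stale data.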

Some GET_PART entry might hang in replication queue if part is lost on all replicas and there are no other parts in the same partition. This setting makes sense on Mac OS X because getrlimit() fails to retrieve Filesystem: Ext4 is Ok. Mount with noatime,nobarrier. Data Bus: Use Change Data Capture (MySQL binlog, MongoDB Oplog) and batch table scan to publish data to message queue like Kafka. When the transaction starts, it updates the table ad_clicks, setting the queue position for the clicks. ClickHouse client version 22.6.3.35 (official build). establish connections to ClickHouse server on localhost , using Default username and empty password. Configure ZooKeeper on a Results displayed. The replication happens on the mysql database level. ClickHouse allows generating analytical reports of data using SQL queries that are updated in real-time. Using status variables for Galera Cluster Replication Health-Check. The following queries are recommended to be included in monitoring: SELECT * FROM system.replicas For more information, see the ClickHouse guide on System Tables SELECT * FROM system.merges Checks on the speed and progress of currently executed merges. Shown as task: clickhouse.UncompressedCacheBytes Most other filesystems should work fine. Install Altinity Stable build for ClickHouse TM. one more thing my cluster have 3 shard and every shard have 1 replicate. Rober Hodges and Mikhail Filimonov, Altinity clickhouse-client --query "SELECT replica_path || '/queue/' || node_name FROM system.replication_queue JOIN system.replicas USING (database, table) WHERE create_time < now() - INTERVAL 1 DAY AND type = 'MERGE_PARTS' AND last_exception LIKE '%No active replica has part%'" | while read i; do zk-cli.py --host -n $i rm; done You can later capture the stream of message on ClickHouse side and process it as you like. 
Now offering Altinity.Cloud Major committer and community sponsor for ClickHouse in US/EU Robert Hodges - Altinity CEO 30+ years on DBMS plus virtualization and security. To configure ClickHouse to use ZooKeeper, follow the steps shown below. There are several ways to do that: Run something like SELECT FROM MySQL -> INSERT INTO ClickHouse. replication_queue replication_queue Contains information about tasks from replication queues stored in ClickHouse Keeper, or ZooKeeper, for tables in the ReplicatedMergeTree family. This webinar will introduce how replication works internally, explain configuration of clusters with replicas, and show you how to set up and manage ZooKeeper, which is necessary for replication to function. Please select another system to include it in the comparison.  ClickHouse ReplicatedMergeTree zookeeper . replication_applier_status_by_coordinator: The current status of the coordinator thread that only displays information when using a multithreaded slave, This data dictionary table also provides information on the last transaction buffered by the coordinator thread to a workers queue, as well as the transaction it is currently buffering. ClickHouse clusters that are configured on Kubernetes have several options based on the Kubernetes Custom Resources settings.
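The purge one-liner quoted above selects replication queue entries by three conditions and builds a ZooKeeper path for each. A minimal Python sketch of the same selection logic follows, assuming the rows have already been fetched as dictionaries with the column names used in that query; the function name `stale_queue_nodes` and the sample values in the usage note are hypothetical.

```python
from datetime import datetime, timedelta


def stale_queue_nodes(rows, now, max_age=timedelta(days=1)):
    """Build ZooKeeper paths for stuck MERGE_PARTS queue entries.

    Each row is a dict with the keys replica_path, node_name, type,
    create_time and last_exception (system.replication_queue joined
    with system.replicas, as in the quoted query).
    """
    paths = []
    for row in rows:
        is_old = row["create_time"] < now - max_age
        is_merge = row["type"] == "MERGE_PARTS"
        is_stuck = "No active replica has part" in row["last_exception"]
        if is_old and is_merge and is_stuck:
            paths.append(row["replica_path"] + "/queue/" + row["node_name"])
    return paths
```

For example, a row with `replica_path` set to `/clickhouse/tables/01/t/replicas/r1` and `node_name` set to `queue-0000000001` would yield the path `/clickhouse/tables/01/t/replicas/r1/queue/queue-0000000001`. Reviewing the returned list before deleting anything in ZooKeeper is probably wise.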

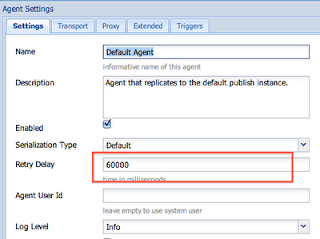


Step 4. table (String): Name of the table. message-queue micro-blogging ClickHouse datasource plugin provides support for ClickHouse as a backend database. Enable NCQ. Supports Kubernetes-based replication cluster. #10852. Replication queue; Schema migration tools for ClickHouse. Graphite: Use the embedded exporter. Copy files to your Grafana plugin directory. SELECT count(*) FROM system.replication_queue. It is simple and works out of the box. For instance, consider the use case of a Mobile Ad. Fix bug in replication that doesn't allow replication to work if the user has executed mutations on the previous version.


Contains information about local files that are in the queue to be sent to the shards. Clickhouse Cheatsheet. The FDW supports advanced features like aggregate pushdown and joins pushdown. Elasticsearch relies on document replication. Clickhouse is a OLAP database that stores data in column for fast analytics. If different versions of ClickHouse are running on the cluster servers, it is possible that distributed queries using the following functions will have incorrect results: varSamp, varPop, stddevSamp, stddevPop, covarSamp, covarPop, corr. SQL Server Change Data Capture or CDC is a way to capture all changes made to a Microsoft SQL Server database. nvartolomei added the bug label on Jun 10 nvartolomei mentioned this issue on Jun 10 The clickhouse_fdw is open-source. These local files contain new parts that are created by inserting new data into the Distributed table in asynchronous mode. Presenter Bio and Altinity Introduction The #1 enterprise ClickHouse provider. ClickHouse allows to define default_database for each shard and then use it in query time in order to route the query for a particular table to the right database. parts_to_check (UInt32) - The number of data parts in the queue for verification. 2. clickhouse-client --query="SELECT * FROM table FORMAT Native" > table.native Native is the most efficient format CSV, TabSeparated, JSONEachRow are more portable: you may import/export data to another DBMS. replica_name ( String) Replica name in ClickHouse Keeper. ClickHouse Features Architecture.

replicated_fetches | ClickHouse Docs Reference Operations System Tables replicated_fetches replicated_fetches Contains information about currently running background fetches. Under normal replication, two transactions can come into conflict if they attempt to update the queue position at the same time. SELECT * FROM system.mutations ClickHouse IP : select table, type, last_exception from system. This webinar will introduce how replication works internally, explain configuration of clusters with replicas, and show you how to set up and manage ZooKeeper, which is necessary for replication to function. Meanwhile replication_queue filled up with invalid partitions. For an example of a configuration file using each of these settings, see the 99-clickhouseinstllation-max.yaml file as a template. Newer releases promise new features, but older ones are easier to upgrade to.
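When replication_queue fills up, a per-table summary of the entries is often easier to read than the raw rows. The following Python sketch groups rows by database, table, and task type and counts how many carry an exception. It assumes the rows were fetched as dictionaries with the system.replication_queue column names `database`, `table`, `type`, `last_exception`, and `num_tries`; the function name `summarize_queue` and the output keys are hypothetical.

```python
from collections import defaultdict


def summarize_queue(rows):
    """Aggregate replication queue rows per (database, table, type)."""
    summary = defaultdict(lambda: {"entries": 0, "count_err": 0, "max_tries": 0})
    for row in rows:
        key = (row["database"], row["table"], row["type"])
        stats = summary[key]
        stats["entries"] += 1
        # An empty last_exception means the task has not failed so far.
        if row["last_exception"] != "":
            stats["count_err"] += 1
        stats["max_tries"] = max(stats["max_tries"], row["num_tries"])
    return dict(summary)
```

A group with a high `count_err` and a growing `max_tries` is a reasonable first place to look when the queue does not drain.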


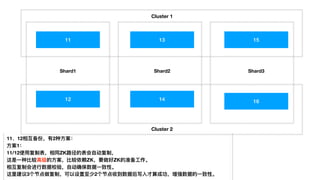

One more thing: my cluster has 3 shards and every shard has 1 replica. We are fine with inconsistent replicas for some time. Dec 21, 2017: This release contains bug fixes for the previous release 1.1.54318: Fixed bug with possible race condition in replication that could lead to data loss. Use this password to log in as root: $ mysql -u root -p. MySQL - The world's most popular open source database. MySQL binlog. We use the Graphile Worker for our plugin jobs implementation. One design choice that makes Quickwit more cost-efficient is our replication model. A server partitioned from the ZK quorum is unavailable for writes (Replication and the CAP theorem).
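The statement about a server partitioned from the ZooKeeper quorum rests on the majority rule: a ZooKeeper ensemble stays operational only while a strict majority of its nodes can reach each other. A minimal Python sketch of that rule, with the hypothetical function name `has_quorum`:

```python
def has_quorum(reachable_nodes, ensemble_size):
    """Return True when a strict majority of the ensemble is reachable."""
    if ensemble_size <= 0:
        raise ValueError("ensemble_size must be positive")
    return reachable_nodes > ensemble_size // 2
```

With a 3-node ensemble, 2 reachable nodes still form a quorum and 1 does not. A 4-node ensemble tolerates only one failure, the same as 3 nodes, which is why odd ensemble sizes are the usual choice.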


$ sudo apt install clickhouse-server clickhouse-client -y. You should update all cluster nodes. Contains information about clusters available in the config file and the servers in them. The first command that we run is Repadmin /replsummary to check the current replication health between the domain controllers. Get by ID may show delays up to 1 sec. Select the cluster and click Edit cluster in the top panel. tickTime: 3000 ms. The following modules are needed for MySQL and ClickHouse integrations: pip install mysqlclient; pip install mysql-replication; pip install clickhouse-driver. ClickHouse is an open-source column-oriented DBMS for online analytical processing developed by the Russian IT company Yandex for the Yandex.Metrica web analytics service.


The project was released as open ClickHouse is a database for storing large amounts of data, most often using more than one replica. This is normal due to asynchronous replication (if quorum inserts were not enabled): the replica on which the data part was written failed, and when it came back online it no longer contained that data part. ClickHouse is an open-source column-oriented DBMS for online analytical processing developed by the Russian IT company Yandex for the Yandex.Metrica web analytics service. ClickHouse was originally designed for bare metal operation with tightly coupled compute and storage. ClickHouse is used by Yandex, CloudFlare, VK.com, Badoo and other teams across the world for very large amounts of data (thousands of row inserts per second, petabytes of data stored on disk). zookeeper_path (String) - Path to table data in ClickHouse Keeper. The clickhouse-format tool is now able to format multiple queries when the -n argument is used. When support for ClickHouse is enabled, ProxySQL will listen on port 6090, accepting connections using the MySQL protocol. ClickHouse Keeper is a solution built into ClickHouse Server for implementing ClickHouse replication for horizontal scalability across nodes and clusters. The main challenge is to move data from MySQL to ClickHouse. Columns: database (String): Name of the database. Two Sizes Fit Most: PostgreSQL and ClickHouse, The New Stack, 13 April 2022, thenewstack.io. To enable support for ClickHouse, it is necessary to start proxysql with the --clickhouse-server option. wsrep_local_recv_queue_avg: The average size of the local received queue since the last status query. Out-of-the-box services such as monitoring, log search, and parameter modification are provided in the console. The default is 16. External monitoring collects data from the ClickHouse cluster and uses it for analysis and review.
Open the debug-level log: in config.xml, set <level>debug</level> to view the log content.

Example 4: Show replication partner for a specific domain controller. If you use one of these versions with Replicated tables, the update is strongly recommended. Add interactive documentation in clickhouse-client about how to reset the password. golang-migrate; Server config files; Settings to adjust; Shutting down a node; SSL connection unexpectedly closed; Suspiciously many broken parts; System tables eat my disk; Threads; Who ate my memory; X rows of Y total rows in filesystem are suspicious; ZooKeeper. I use ClickHouse (21.7.5) on Kubernetes (1.20) with the following setup: 4 shards, each containing 2 replicas. If you want to see the replication status for a specific domain controller, use this command. Replacing a failed ZooKeeper node. We are planning on separating read and write replicas to minimize the impact of write-heavy workloads on reads. Sematext provides an excellent alternative to other ClickHouse monitoring tools, one that is more comprehensive and easy to set up.

synch - Sync data from the other DB to ClickHouse (cluster) Python. UPD 2020: Clickhouse is getting stronger with each release.

After adding the repository and running the apt update command to download the package list, simply run this command below to install the ClickHouse server and Clickhouse client. In the Managed Service for ClickHouse cluster, create a table on the RabbitMQ engine. In 21.3 there is already an option to run own clickhouse zookeeper implementation. DBMS > ClickHouse vs. CrateDB MQTT (Message Queue Telemetry Transport) PostgreSQL wire protocol Prometheus Remote Read/Write RESTful HTTP API; Supported programming languages: C# 3rd party library C++ Replication methods Methods for redundantly storing data on multiple nodes:

It's fixed in cases when the partition key contains only columns of integer types or Date[Time]. If different versions of ClickHouse are running on the cluster servers, it is possible that distributed queries using the following functions will have incorrect results: varSamp, varPop, stddevSamp, stddevPop, covarSamp, covarPop, corr. You should update all cluster nodes. This release contains bug fixes for the previous release 1.1.54318: clickhouse-playground. My clickhouse-server's version is 20.38. How can I fix this warning?

golang-migrate; Server config files; Settings to adjust; Shutting down a node; SSL connection unexpectedly closed; Suspiciously many broken parts; System tables eat my disk; Threads; Who ate my memory; X rows of Y total rows in filesystem are suspicious; ZooKeeper. Change additional cluster settings: Backup start time (UTC): UTC time in 24-hour format when you would like to start creating a This is very similar in design as the Apache Cassandra. ALTER on ReplicatedMergeTree is not compatible with previous versions.20.3 creates a different metadata structure in ZooKeeper for ALTERs. We are now Prerequisites. mysql kafka replication clickhouse postgresql data-etl increment-etl. clickhouse clickhouse db 2019-03-10 DB::Exception: RangeReader read 7523 rows, but 7550 expectedclickhouse 2020-02-03; ClickHouse 21.6.61000 DB::Exception It is 100-1000 times faster than traditional methods. clickhouse-keeper. The /replsummary operation quickly and concisely summarizes replication state and relative health of a forest. ClickHouse cost less than ES server. SELECT database, table, type, max(last_exception), max(postpone_reason), min(create_time), max(last_attempt_time), max(last_postpone_time), max(num_postponed) AS max_postponed, max(num_tries) AS max_tries, min(num_tries) AS min_tries, countIf(last_exception != '') AS count_err, countIf(num_postponed > 0) AS golang-migrate; Server config files; Settings to adjust; Shutting down a node; SSL connection unexpectedly closed; Suspiciously many broken parts; System tables eat my disk; Threads; Who ate my memory; X rows of Y total rows in filesystem are suspicious; ZooKeeper. It is designed to be a customizable data replication tool that: Supports multiple sources and destinations. ClickHouse Kafka Engine Setup. I use KafkaEngine on every clickhouse pod, which connects within single consumer group to own kafka's partition. 
It is very flexible; for instance, it is possible to combine different topologies in a single cluster, manage multiple logical clusters using a shared configuration, etc. ClickHouse: Charts that show the operation of the entire ClickHouse cluster and hosts. This is useful in the scenario when a user has installed ClickHouse, set up the password, and instantly forgotten it. 1. For non-Kubernetes instructions on installation, look here for Confluent Kafka and here for ClickHouse. #26843 (default is 120 seconds). Establish connections to the ClickHouse server on localhost, using the default username and an empty password. Is there a way we can also throttle replication so that it takes up fewer system resources and doesn't impact read performance?


clickhouse.replica.queue.size (gauge) The number of replication tasks in queue.

repadmin /showrepl


All about Zookeeper and ClickHouse Keeper.pdf.


shard_num ( UInt32) The shard number in the cluster, starting from 1. shard_weight ( UInt32) The relative weight of the shard when writing data. SELECT count(*) FROM system.replication_queue.
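How shard_weight affects writes can be shown with a short Python sketch. It follows the rule described in the ClickHouse Distributed engine documentation: the sharding expression value is taken modulo the total weight, and each shard owns a half-open interval of remainders as wide as its weight. The function name `pick_shard` is hypothetical, and the sketch ignores replicas and insert settings.

```python
def pick_shard(sharding_value, weights):
    """Return the 1-based shard number for a row (simplified model).

    weights is the list of shard_weight values in shard_num order.
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("total shard weight must be positive")
    remainder = sharding_value % total
    upper = 0
    for shard_num, weight in enumerate(weights, start=1):
        upper += weight  # this shard owns remainders in [upper - weight, upper)
        if remainder < upper:
            return shard_num
    raise AssertionError("unreachable: remainder is always below total")
```

With weights 9 and 10, remainders 0 through 8 go to shard 1 and remainders 9 through 18 go to shard 2, so the second shard receives a slightly larger share of the rows.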

See config.xml. ClickHouse is an open source column-oriented database management system capable of real time generation of analytical data reports using SQL queries. Replication and Cluster improvements: ClickHouse Keeper (experimental) in-process ZooKeeper replacement ClickHouse embedded monitoring has become a bit more aggressive.

data parts are added to the verification queue and copied from the replicas if necessary.

Please remember that currently the Kafka engine supports only at-least-once delivery guarantees. As shown in Part 1, ClickHouse Monitoring Key Metrics, the setup, tuning, and operation of ClickHouse require deep insight into performance metrics such as locks, replication status, merge operations, cache usage and many more. My clickhouse-server's version is 20.38. How can I fix this warning? This is normal due to asynchronous replication (if quorum inserts were not enabled): the replica on which the data part was written failed, and when it came back online it no longer contained that data part. A tool for easy ClickHouse backup and restore with S3 support: easy creation and restoration of backups of all or specific tables, you can write your own queries and cron jobs, and incremental backups on S3 are supported. STATUS_UNKNOWN: Cluster state is unknown. If we were connecting to a different secured ClickHouse machine, we can specify these details at the command line. As we grow we will provide a transparent employee participation program. It now collects several system stats, distribution_queue: broken_data_files, broken_data_compressed_bytes; errors: As a side effect the setting allow_s3_zero_copy_replication is renamed to allow_remote_fs_zero_copy_replication in ClickHouse release 21.8 and above. 1. Enable write cache on HDDs. Regardless of RAID, always use replication for data safety. A part is put in the verification queue if there is suspicion that it might be damaged. This is my personal Run Book, and I am happy to share it in the Altinity blog. Unfortunately, ClickHouse test coverage is not perfect, and sometimes new features are accompanied by disappointing regressions. I wonder: there are 6 parts that clickhouse-server wants to send to another shard.


One more important note about using Circle topology with ClickHouse is that you should set a internal_replication option for each particular shard to TRUE.  To configure ClickHouse to use ZooKeeper, follow the steps shown below.


To start recovery, create the ClickHouse Keeper node /path_to_table/replica_name/flags/force_restore_data with any content, or run the command to restore all replicated tables: sudo -u clickhouse touch /var/lib/clickhouse/flags/force_restore_data; Then start the server (restart, if it is already For dashboard system: Grafana is recommended for I have a cluster of 3 ClickHouse servers with a table using ReplicatedMergeTree.

In this Altinity webinar, we'll explain why ZooKeeper is necessary, how it works, and introduce the new built-in replacement named ClickHouse Keeper. This queue is a consumer that will periodically check the jobs listed in Postgres and trigger job runs when the job's target time is reached.

Restart clickhouse-server is not disappear Clickhouse is a column storage-based database for real-time data analysis based on the Open Source of YANDEX (Russia's largest search engine). ClickHouse team certainly addresses problems in TRICKS EVERY CLICKHOUSE DESIGNER SHOULD KNOW Robert Hodges ClickHouse SFO Meetup August 2019. pypy is better from performance prospective. Introduce replication slave for MySQL that writes to ClickHouse. Earlier versions do not understand the format and cannot proceed with their replication queue. I am able to ingest and fetch the data from both the machines and replication also working fine. CompiledExpressionCacheCount -- number or compiled cached expression (if CompiledExpressionCache is enabled) jemalloc -- parameters of jemalloc allocator, they are not very useful, and not interesting MarkCacheBytes / MarkCacheFiles -- there are cache for .mrk files (default size is 5GB), you can see is it use all 5GB or not MemoryCode -- how much memory This setting could be used to switch replication to another network interface. Sync data from other DB to ClickHouse, current support postgres and mysql, and support full and increment ETL. GitHub Gist: instantly share code, notes, and snippets. ClickHouse servers are managed by systemd and normally restart following a crash. clickhouse-client -i clickhouse.timeflow.com:2131 -u benjaminwootton --password ABC123. No Kubernetes, no Docker just working right with Zookeeper and Altinity Stable to get your clusters going. Clickhouse ; Elasticsearch; Splunk; Packer / Terraform; Ansible; Work at Zentral. Clickhouse. Clickhouse is an open source column-oriented database management system built by Yandex. Replication queue; Schema migration tools for ClickHouse. Columns: database ( String) Name of the database. The recommended settings are located on ClickHouse.tech zookeeper server settings. The global configuration is applied first, and then the topic-level configuration is applied (if it exists). 
The exercises should work for any type of installation, but you'll need to change host names accordingly. ClickHouse replication is asynchronous and multi-master (internally it uses ZooKeeper for quorum). Its main goal is HA, but if something goes wrong, here's how to check for various bad things that may happen: ClickHouse is very DBA friendly, and the system database offers everything that a DBA may need. To build our cluster, we're going to follow these main steps: Install ZooKeeper. Is there a way we can also throttle replication so that it takes up fewer system resources and doesn't impact read performance? clickhouse.replica.queue.size (gauge) The number of replication tasks in queue. Sometimes they appear due to the fact that the message queue system (Kafka/Rabbit/etc) offers at-least-once guarantees. Eventual Consistency Synchronous doc based replication. It may show that the whole mutation is already done, but the server still has MUTATE_PART tasks in the replication queue and tries to execute them. Built-in replication is a powerful ClickHouse feature that helps scale data warehouse performance as well as ensure high availability. Once ZooKeeper has been installed and configured, ClickHouse can be modified to use ZooKeeper.
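The Kafka configuration precedence mentioned earlier (the global kafka section applied first, then the topic-level kafka_* section if it exists) amounts to a layered merge. A minimal Python sketch under simplifying assumptions: the configuration is modeled as a plain dictionary, the topic-level key is formed as `kafka_` plus the topic name, and the function name `effective_kafka_config` and the sample option names are hypothetical.

```python
def effective_kafka_config(config, topic):
    """Merge global and topic-level Kafka settings (simplified model)."""
    # The global section is applied first.
    merged = dict(config.get("kafka", {}))
    # The topic-level section, if present, overrides matching keys.
    merged.update(config.get("kafka_" + topic, {}))
    return merged
```

For a topic without its own section the result is simply the global settings, so a topic-level block only needs to list the options that differ.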

ClickHouse provides logs and system tables for self-monitoring. Shown as task: clickhouse.UncompressedCacheBytes. Replication queue; Schema migration tools for ClickHouse. Install ClickHouse: install a new ClickHouse instance with no data.
