With all these changes in place, our system is better decoupled and more resilient, all the while having an up-to-date caching mechanism that scales well and is easily tuned. Recently, the team was tasked with providing up-to-date aggregations of catalog data to be used by the frontend of the GetYourGuide website.

The state store will be created before we initialize our CustomProcessor; all we need is to pass stateStoreName inside it during initialization (more about it later).

I do plan to cover aggregating and windowing in a future post. Here we simply create a new key-value pair with the same key but an updated value. That's why I also became a contributor to Kafka Streams, to help other maintainers advance this amazing piece of software. The latter is the default in most other databases and is commonly recommended as the default for Spring services anyway. Stateless transformations are used to modify data, for example to map values or to filter some of them out of a stream.

To process the inserts to the outbox table, we use Debezium, which follows the MySQL binlog and writes any new entries to a Kafka topic.

Operations such as aggregations (like the previous sum example) and joins between Kafka streams are examples of stateful transformations.

The following Kafka Streams transformation examples are primarily examples of stateless transformations.

We are using a UUID as the primary key, which normally avoids this kind of lock contention since the distribution of new keys is pretty random across the index. With an empty table, however, MySQL effectively locks the entire index, so every concurrent transaction has to wait for that lock. We got rid of this kind of locking by lowering the transaction isolation level from MySQL's default of REPEATABLE READ to READ COMMITTED.

Dynamically materialize this stream to topics using the provided Produced instance. Transforming records might result in an internal data redistribution if a key-based operator (like an aggregation or join) is applied to the result KStream. To trigger periodic actions via punctuate(), a schedule must be registered.

So, when we had to implement the VWO Session Recordings feature for the new Data platform, Kafka was a logical choice, with the Kafka Streams framework doing all the heavy lifting involved in using the Kafka Consumer API, allowing us to focus on the data processing part. Kafka Streams is a relatively young project that lacks many features that, for example, already exist in Apache Storm (not directly comparable, but oh well). This overhead meant that messages that already had a large payload would leave an even larger footprint on the Kafka broker.

We also want to test it, right? We tested the expected results for filters on sensor-1 and sensor-2 and a default. `valFilter` is set to MN in the Spec class.

This ensures we only output at most one record for each key in any five-minute period. Schedule actions to occur at strictly regular intervals (wall-clock time) and gain full control over when records are forwarded to specific Processor Nodes.

`Processor KSTREAM-TRANSFORM- has no access to StateStore counterKeyValueStore as the store is not connected to the processor`


To populate the outbox table, we created a Hibernate event listener that notes which relevant entities got modified in the current transaction.


Well, I didn't tell you the whole story. This involves creating an internal topic with the same number of partitions as the source topic and writing records with identical keys to the same partition.

We need to buffer and deduplicate pending cache updates for a certain time to reduce the number of expensive database queries and computations our system makes. Since the website and other parts of our stack that index Catalog data do not need these updates in real time, computing and passing this information along immediately to other parts of our system is unnecessarily resource-intensive.

Kafka Streams Transformations provide the ability to perform actions on Kafka Streams such as filtering and updating values in the stream. We'll cover examples of various inputs and outputs below. You can create both stateless and stateful transformers. Feel free to play around with the code, add more payloads, and modify the aggregation logic.

For our use case we need two state stores. Otherwise, it will throw something along the lines of: Ooof. Now you can start our application, send some messages, and you should see that the messages are being received by our Kafka Streams listener.

The Visitor Java class represents the input Kafka message and has the JSON representation: The VisitorAggregated Java class is used to batch the updates and has the JSON representation: The snippet below describes the code for the approach. Or, a certain amount of time had elapsed since the last update.

Stateless transformers don't leave any memory or network footprint on the broker side; the transformation happens on the client side.

Kafka Streams Transformation Examples featured image: https://pixabay.com/en/dandelion-colorful-people-of-color-2817950/
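The idea of buffering and deduplicating pending cache updates can be sketched in plain Java. This is only a model under stated assumptions, not the article's transformer: the class name `PendingUpdateBuffer`, its methods, and the `recordDelayMs` parameter are hypothetical, a `LinkedHashMap` stands in for a Kafka Streams state store, and the caller passes timestamps in explicitly instead of relying on a scheduled punctuation.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical plain-Java model of a deduplicating buffer for pending cache updates. */
class PendingUpdateBuffer {
    private final long recordDelayMs;
    // key -> time the key was first enqueued; a LinkedHashMap stands in for the state store
    private final Map<String, Long> pending = new LinkedHashMap<>();

    PendingUpdateBuffer(long recordDelayMs) {
        this.recordDelayMs = recordDelayMs;
    }

    /** Returns true if the key was newly enqueued, false if it was already pending. */
    boolean offer(String key, long nowMs) {
        return pending.putIfAbsent(key, nowMs) == null;
    }

    /** Meant to be called once per flush interval: removes and returns keys that waited long enough. */
    List<String> flush(long nowMs) {
        List<String> due = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = pending.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (nowMs - entry.getValue() >= recordDelayMs) {
                due.add(entry.getKey());
                it.remove();
            }
        }
        return due;
    }

    int size() {
        return pending.size();
    }
}
```

With a record delay of five minutes, repeated updates for the same key collapse into a single pending entry, and a key is handed out again only after it has been re-enqueued and has waited the full delay once more.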


As a benefit, this also got rid of other occasional locking issues we had encountered in our service.

The problem was that MySQL was locking the part of the index where the primary key would go, holding up inserts from other transactions.

At Wingify, we have used Kafka across teams and projects, solving a vast array of use cases. The challenges we faced with a time-based windowing and groupByKey() + reduce() approach indicated that it was not the ideal approach for our use case.

Use it to produce zero, one or more records from each input record processed. Also, related to stateful Kafka Streams joins, you may wish to check out the previous Kafka Streams joins post. It has its pros and cons. After all, the Processor API is not as scary as it appears to be.

It is recommended to watch the short screencast above, before diving into the examples.

Here is the method that it calls: Now we instantiate the transformer and set up some Java beans in a configuration class using Spring Cloud Stream: The last step is to map these beans to input and output topics in a Spring properties file: We then scope this configuration class and properties to a specific Spring profile (same for the Kafka consumer), corresponding to a deployment which is separate from the one that serves web requests.

Moreover, you can distribute (balance) the transformation work among instances to reduce the workload. It could also be improved to have an intermediate Map that would accumulate characters and then, once the loop is finished, dump the values into kvStore, but for now I'll go the simpler way. Here's a pretty good option: the Kafka Streams course on Udemy. A Transformer (provided by a TransformerSupplier) is applied to each input record. As previously mentioned, stateful transformations depend on maintaining the state of the processing.

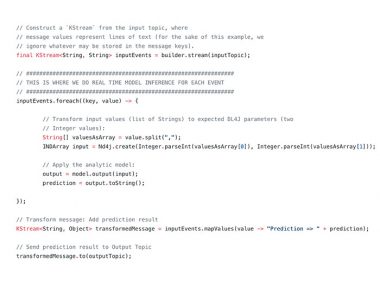

I'll try to post more interesting stuff I'm working on. Below is the code snippet using the transform() operator.

The data is not sent (round-tripped) to any internal Kafka topic. Group the records by their current key into a KGroupedStream while preserving the original values. We check whether its key is present in our queue. `count` is a stateful operation which was only used to help test in this case. Let's create a message binding interface: Then, assuming that you have a Kafka broker running at localhost:9092. Today, we will implement a stateful transformer, so we can use as many of the available features as possible.

Also, we expect the updates to be in near real-time. All the source code is available from my Kafka Streams Examples repo on GitHub.

Since it is a stateless transformation, it will live on the receiver's instance. His team's mission is to develop the services that store our tours and activities' core data and further structure and improve the quality of that data.

Now we have a job queueing solution in place, which gives us consistency and is well decoupled. However, there were still a few concerns to be addressed: Decoupling: We want to perform the computation and cache update in a separate work stream from the one that responds to the update request.

Let's define a method initializeStateStores where we will intercept the builder and create our desired state store: Woah, woah, let's slow down! The Processor API is a low-level Kafka Streams construct. Using the Processor API requires manually creating the streams Topology, a process that is abstracted away from users when using standard DSL operators like map(), filter(), reduce(), etc.

Personally, I got to the Processor API when I needed a custom count-based aggregation. I was deciding how and what goes to the internal topic(s), and I had better control over my data overall. Let's also pass our countercap while we are at it: The transform method will be receiving key-value pairs that we will need to aggregate (in our case, the values will be the messages from the earlier example: aaabbb, bbbccc, bbbccc, cccaaa): We will have to split them into characters (unfortunately there is no character (de)serializer, so I have to store them as one-character strings), aggregate them, and put them into a state store: Pretty simple, right? If the instance goes down, it will not get rebalanced among other listening instances from the same group; only the original data (pre-transform) will. The Transformer interface having access to a key-value store and being able to schedule tasks at fixed intervals meant we could implement our desired batching strategy.
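As a rough illustration of the character aggregation described above, here is a minimal plain-Java sketch. It is not the article's CustomProcessor: the class `CharacterCounter` is hypothetical, a `HashMap` stands in for the `KeyValueStore`, and the counter cap is left out because the text does not spell out how it behaves.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for the aggregation step; not tied to the Kafka Streams API. */
class CharacterCounter {
    // Plays the role of a KeyValueStore<String, Long>; characters are stored as one-character strings
    private final Map<String, Long> kvStore = new HashMap<>();

    /** Splits the message value into characters and increments each character's count. */
    void transform(String key, String value) {
        for (char c : value.toCharArray()) {
            kvStore.merge(String.valueOf(c), 1L, Long::sum);
        }
    }

    /** Returns the current count for a one-character string, or 0 if it was never seen. */
    long count(String character) {
        return kvStore.getOrDefault(character, 0L);
    }
}
```

Feeding the four example messages aaabbb, bbbccc, bbbccc and cccaaa through `transform` leaves counts of 6 for a, 9 for b and 9 for c in the map.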

Need to learn more about Kafka Streams in Java?

This way, we can retain consistency by writing data in a single transaction on only one data source; there is no need to worry about whether our job queue is down at the moment. If you start the application, everything should boot up correctly with no errors. &stream-builder-${stream-listener-method-name}: more about this at https://cloud.spring.io/spring-cloud-static/spring-cloud-stream-binder-kafka/2.2.0.RC1/spring-cloud-stream-binder-kafka.html#_accessing_the_underlying_kafkastreams_object. Line 2: Get the actual StreamsBuilder from our factory bean. Line 3: Create a StoreBuilder that builds a KeyValueStore with a String serde defined for its key and a Long serde defined for its value. Line 4: Add our newly created StoreBuilder to the StreamsBuilder. In the tests, we test for the new values from the result stream.

Therefore, we can improve the scalability of our solution by only updating any cache entry at most every few minutes, to ease the load on our service and database.

To maintain the current state of processing the input and outputs, Kafka Streams introduces a construct called a State Store. This will allow us to test the expected `count` results. I'll explain what we are doing line by line: Line 1: Get the StreamsBuilderFactoryBean from the ApplicationContext by name.

This is a stateful record-by-record operation: a Transformer can use state that is available beyond a single call of transform(Object, Object). Meaning, if you restart your application, it will re-read the topic from the beginning and re-populate the state store (there are certain techniques that could help to optimize this process, but they are outside the scope of this article), and then it keeps both the state store and the Kafka topic in sync.

The way we wanted to batch updates to an external sink for a particular customer's data was to fire an update if either the number of events for that customer exceeded a certain threshold or a certain amount of time had elapsed since the last update. The batching strategy we wanted to implement was similar to the functionality that frameworks like Apache Beam provide through the concept of windows and triggers. Batching write operations to a database can significantly increase the write throughput. VisitorProcessor implements the init(), transform() and punctuate() methods of the Transformer and Punctuator interfaces.

The `branch` function is used to split a KStream by the supplied predicates into one or more KStream results.
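The batching strategy (flush on an event-count threshold, or after enough time has passed since the last update) can be modelled in plain Java as follows. This is a sketch under assumptions, not the article's VisitorProcessor: the class `VisitorBatcher`, the `maxEvents` and `maxAgeMs` parameters, and the explicit `nowMs` timestamps are all hypothetical, a `HashMap` stands in for the key-value state store, and the count trigger fires once the threshold is reached.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical plain-Java model of count- and time-triggered batching per customer. */
class VisitorBatcher {
    /** Events collected for one customer since the last flush. */
    private static final class Batch {
        final List<String> events = new ArrayList<>();
        long lastFlushMs;

        Batch(long nowMs) {
            this.lastFlushMs = nowMs;
        }
    }

    private final int maxEvents;
    private final long maxAgeMs;
    // Stands in for the key-value state store
    private final Map<String, Batch> store = new HashMap<>();

    VisitorBatcher(int maxEvents, long maxAgeMs) {
        this.maxEvents = maxEvents;
        this.maxAgeMs = maxAgeMs;
    }

    /** Count trigger: returns the batch once it holds maxEvents events, otherwise an empty list. */
    List<String> transform(String customerId, String event, long nowMs) {
        Batch batch = store.computeIfAbsent(customerId, id -> new Batch(nowMs));
        batch.events.add(event);
        return batch.events.size() >= maxEvents ? drain(batch, nowMs) : List.of();
    }

    /** Time trigger: flushes every non-empty batch that has not been flushed for maxAgeMs. */
    Map<String, List<String>> punctuate(long nowMs) {
        Map<String, List<String>> flushed = new HashMap<>();
        for (Map.Entry<String, Batch> entry : store.entrySet()) {
            Batch batch = entry.getValue();
            if (!batch.events.isEmpty() && nowMs - batch.lastFlushMs >= maxAgeMs) {
                flushed.put(entry.getKey(), drain(batch, nowMs));
            }
        }
        return flushed;
    }

    /** Copies out the buffered events, clears the batch, and records the flush time. */
    private static List<String> drain(Batch batch, long nowMs) {
        List<String> out = new ArrayList<>(batch.events);
        batch.events.clear();
        batch.lastFlushMs = nowMs;
        return out;
    }
}
```

In a real transformer the time trigger would be driven by a scheduled punctuation rather than by the caller, but the two conditions compose the same way: whichever fires first drains the batch and resets the clock for that customer.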

A Transformer computes zero or more output records. With a few load test runs, we observed certain areas of concern. The result of the aggregation step is a KTable object and is persisted and replicated for fault tolerance with a compacted Kafka changelog topic. Let's create a class CustomProcessor that will implement a Transformer.

In the implementation shown here, we are going to group by the values.

We needed something beyond what the Kafka Streams DSL operators offered. Set a new key (with possibly new type) for each input record. We can adjust the record delay and flush interval of the Kafka transformer, increase the number of Kafka consumers, or even have the Kafka consumer push the aggregated messages to a job queue with different scalability strategies.
